For more than a decade, I have collected pages upon pages of notes and calculations from my apprenticeship and studies. At the end of each semester, I sorted the handouts together with my notes and filed them in a folder. For some courses from the beginning of my studies, I only have the printed scripts with my handwritten notes. To this day, most of these documents are stored in the attic at home, while the important folders stand behind my desk, where I can reach them quickly to look something up. Thanks to the sophisticated filing structure, I always find what I am looking for within a short time. However, this system has two main problems. First, I can only look something up when I am at home. Second, the paper files cannot be duplicated, so they are not protected against destruction by fire or other damage.

This Christmas vacation, I finally found time to digitize all those folders. The work was time-consuming but certainly worth it, as I now have all my documents in digital form and can access them from anywhere. This post describes the most important points to consider when digitizing tens of thousands of pages.

Scanning the Documents

Scanning the documents is not as simple as it sounds. Some papers are stapled together and others are held together with paper clips. Some pages also carry Post-it notes, which would cause paper jams inside the scanner. All of these objects must be removed manually before scanning. The papers are then split into batches of about 200 pages, which is significantly fewer than the maximum capacity of the scanner's automatic document feeder (ADF). Batches of 200 pages are a good compromise between processing time and the likelihood of a paper jam. Within each batch, the paper format must be consistent. If the format changes, e.g. from A4 to A3 or from A4 to A5, a new batch must be started. This is particularly time-consuming when many isolated A3 sheets are scattered throughout an A4 stack.

During scanning, I had many paper jams when I fed the paper in the orientation indicated on the scanner. In that orientation, the worn-out and partly crumpled left edge of the paper entered the feeder first. This wear is mainly caused by paper clips, Bostitch staples, the hole punch and frequent leafing through the folder. When I rotated the pages by 180°, I had fewer paper jams, but everything in the scans was upside down.

Once I had figured out the best way to scan the documents, I stored the settings in a custom profile. This profile defines the resolution, the storage path and duplex (double-sided) scanning. I can then select the profile on the home screen whenever I start a new scan. This simplifies and speeds up the process, as all settings are loaded automatically, and it reduces the risk of misconfiguration.

I selected the scanner's maximum optical resolution (600 × 600 dpi), which results in very large files. The scanner automatically splits the pages into PDF files smaller than 15 MB. Each scanned PDF file is named after the user, the date and time of the scan and counters for the scans and pages, e.g. Scan_<username>_30-12-2020_09-20-35_1663_006.pdf. For each subject, I put all the PDF files into one folder and named the folder after the subject. In total, I scanned 21414 pages from 93 subjects, resulting in a total storage volume of 42.6 GB. The total number of pages of all PDFs can be counted with the following command, which uses GNU parallel to speed up the task on a multiprocessor system.

```bash
expr $( echo -n 0; parallel "pdfinfo {} |sed -n 's/Pages: */ + /p'" ::: *pdf|tr '\n' ' ')
```
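The expr one-liner builds one long arithmetic expression out of the pdfinfo output. A sketch of an alternative that lets awk do the summing is shown below. The function names sum_pages and count_all_pages are hypothetical, and count_all_pages assumes that GNU parallel and pdfinfo are installed and that the scans lie in one folder per subject directly below the current directory.

```bash
# Sum the "Pages:" lines of pdfinfo output read from stdin.
# Prints 0 if no "Pages:" line is found.
sum_pages() {
  awk '/^Pages:/ { total += $2 } END { print total + 0 }'
}

# Count the pages of all scans, assuming one folder per subject below the
# current directory. GNU parallel runs several pdfinfo processes at once and
# prints the output of each job as one block.
count_all_pages() {
  parallel pdfinfo ::: */*.pdf | sum_pages
}
```

Calling count_all_pages from the parent folder of the subject folders should print the total page count as a single number.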

Assuming that the scanner can process 50 pages per minute (ppm) in duplex mode, scanning all documents would take more than 7 hours. In practice, 50 ppm is almost impossible to achieve when the next batch has to be prepared in parallel, e.g. by removing staples and paper clips.
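Written out, the estimate above is simply the total page count divided by the assumed throughput, ignoring the time needed to prepare batches and clear paper jams:

```latex
t = \frac{21414\ \text{pages}}{50\ \text{pages/min}} \approx 428\ \text{min} \approx 7.1\ \text{h}
```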

Rotating, Merging and Compressing the PDF Files

In the next step, the individual PDF files from the scanning process must be rotated back by 180° and merged. Moreover, the PDF files should be compressed to reduce the final file size. Various tools with graphical user interfaces are available for this purpose. Since I wanted to perform this process for each subject individually, I decided to write my own script, which has the advantage that the entire process can be fully automated. I tested PDFtk, Ghostscript and PyPDF2 under Linux. The simplest approach is a bash script that uses PDFtk to rotate and merge the PDF files. To compress the files, I recommend Ghostscript.

The following command merges all PDF files whose names match the pattern Scan_*.pdf into merged.pdf. The order of the files, and therefore of the pages, is determined by ls -v, which sorts the file names in natural (version) order. It is worth running the ls command on its own beforehand to verify the ordering.

```bash
pdftk $(ls -v Scan_*.pdf) cat output merged.pdf verbose
```
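To check the ordering without merging anything, a small helper like the following can be used. It is a hypothetical dry-run sketch (the name merge_order is made up) that lists the scans of one folder in the same natural order in which ls -v passes them to pdftk, so that, for example, a file ending in _2.pdf is listed before one ending in _10.pdf.

```bash
# Hypothetical dry-run helper: list the scans of one folder in the order
# in which "pdftk $(ls -v Scan_*.pdf) ..." would merge them.
merge_order() {
  local dir=${1:-.}
  # ls -v sorts the names naturally, i.e. embedded numbers by their value
  ls -v "$dir"/Scan_*.pdf
}
```

A plain ls would sort the same names lexicographically and therefore put _10.pdf before _2.pdf.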

The following command rotates all pages of merged.pdf by 180° and saves the result as rotated.pdf. The rotation is specified by the page range 1-endsouth of the cat operation, i.e. pages 1 to end, rotated to "south" (180°). Unfortunately, I was not able to rotate a PDF file larger than 2.1 GB. Therefore, I recommend rotating the individual PDF files first and merging them afterwards.

```bash
pdftk merged.pdf cat 1-endsouth output rotated.pdf verbose
```
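Besides south, pdftk accepts the keywords north (0°), east (90°) and west (270°), which set an absolute page rotation, as well as left, right and down, which rotate relative to the current orientation. Rotation keywords can be attached to individual page ranges, so single pages can be turned differently from the rest of the document. The helper below is a hypothetical sketch that maps an angle in degrees to the corresponding absolute keyword.

```bash
# Hypothetical helper: map an absolute rotation in degrees to the
# corresponding pdftk page-rotation keyword.
pdftk_rotation() {
  case "$1" in
    0)   echo north ;;
    90)  echo east ;;
    180) echo south ;;
    270) echo west ;;
    *)   echo "unsupported angle: $1" >&2; return 1 ;;
  esac
}
```

For instance, pdftk merged.pdf cat 1-4$(pdftk_rotation 180) 5$(pdftk_rotation 90) 6-end$(pdftk_rotation 180) output fixed.pdf would set page 5 to 90° and all other pages to 180° (the file names and page numbers are only illustrative).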

Because the merged PDF is very large, it should be compressed. Ghostscript is well suited to turning large PDFs into small yet legible documents. The following command compresses rotated.pdf into compressed.pdf.

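```bash
# Compress rotated.pdf (input) into compressed.pdf (output).
# Comments must not follow a trailing backslash, otherwise the line continuation breaks.
gs -sDEVICE=pdfwrite \
   -dBATCH -dNOPAUSE -dQUIET -dSAFER \
   -dCompatibilityLevel=1.4 \
   -dPDFSETTINGS=/ebook \
   -sOutputFile=compressed.pdf \
   rotated.pdf
```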
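The /ebook setting is one of several Ghostscript presets. According to the Ghostscript documentation, /screen targets roughly 72 dpi for images, /ebook roughly 150 dpi, and /printer and /prepress roughly 300 dpi. To choose a preset, it can help to compress a sample file with several of them and compare the resulting sizes. The functions below are a hypothetical sketch of such a comparison; they assume gs is on the PATH and derive the output names from the input name.

```bash
# Hypothetical helpers for choosing a Ghostscript compression preset.

# Compress a PDF with one of Ghostscript's predefined PDFSETTINGS presets.
compress_pdf() {
  local input=$1 output=$2 preset=${3:-/ebook}
  case "$preset" in
    /screen|/ebook|/printer|/prepress|/default) ;;
    *) echo "unknown preset: $preset" >&2; return 1 ;;
  esac
  gs -sDEVICE=pdfwrite -dBATCH -dNOPAUSE -dQUIET -dSAFER \
     -dCompatibilityLevel=1.4 -dPDFSETTINGS="$preset" \
     -sOutputFile="$output" "$input"
}

# Print the new size ($2) as an integer percentage of the original size ($1).
size_percent() {
  echo $(( $2 * 100 / $1 ))
}

# Compress one sample file with several presets and report the resulting sizes.
compare_presets() {
  local input=$1 before preset out after
  before=$(wc -c < "$input")
  for preset in /screen /ebook /printer; do
    out="${input%.pdf}_${preset#/}.pdf"
    compress_pdf "$input" "$out" "$preset" || return 1
    after=$(wc -c < "$out")
    echo "$preset: $(size_percent "$before" "$after") % of the original size"
  done
}
```

Running compare_presets rotated.pdf would write rotated_screen.pdf, rotated_ebook.pdf and rotated_printer.pdf and print the size of each as a percentage of the original. Whether the text is still legible at a given preset has to be judged by looking at the output.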

Finally, all commands are combined in the bash script RotMergComPDF shown below. The scanned PDF files of each subject must be stored in a folder named after the subject. Calling RotMergComPDF <subject> rotates and merges the PDFs from the folder <subject> and stores the result as <subject>.pdf. In addition, a compressed copy is stored as <subject>_compressed.pdf. At the end of the script, the temporary rotated PDF files are deleted.

```bash
#!/bin/sh
# Usage: RotMergComPDF <subject> [<subject> ...]
# Note: file names must not contain spaces (the ls output is word-split).

# loop over all specified subjects
for FILE in "$@"; do
    FILE=${FILE%/}                 # remove trailing file separator if present
    InputPath=$FILE/               # add file separator to subject
    ScanPattern='Scan_*.pdf'
    RotatePattern='rotated_'
    CompressedFile=${FILE}_compressed.pdf
    CombinedFile=$FILE.pdf

    echo "Process subject: $FILE"

    # rotate all PDFs in the subject folder by 180° and store them temporarily
    for i in $(ls -v $InputPath$ScanPattern); do
        pdftk "$i" cat 1-endsouth output "$(dirname "$i")/$RotatePattern$(basename "$i")"
    done

    # combine the rotated PDF files in natural order
    pdftk $(ls -v $InputPath$RotatePattern$ScanPattern) cat output "$CombinedFile"

    # compress the combined PDF file
    gs -sDEVICE=pdfwrite \
       -dBATCH -dNOPAUSE -dQUIET -dSAFER \
       -dCompatibilityLevel=1.4 \
       -dPDFSETTINGS=/ebook \
       -sOutputFile="$CompressedFile" \
       "$CombinedFile"

    # remove only the rotated files of this subject
    rm -f -- "$InputPath$RotatePattern"Scan_*.pdf
done
```
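Because the merge step runs unattended, a quick sanity check is useful: the merged PDF should have exactly as many pages as all scans of the subject together. The sketch below is hypothetical (the names page_count and check_subject are made up) and assumes the folder layout used by RotMergComPDF, i.e. scans in <subject>/Scan_*.pdf and the result in <subject>.pdf, as well as an installed pdfinfo (e.g. from poppler-utils).

```bash
# Print the number of pages of one PDF file.
page_count() {
  pdfinfo "$1" | awk '/^Pages:/ { print $2 }'
}

# Compare the page count of <subject>.pdf with the sum over <subject>/Scan_*.pdf.
check_subject() {
  local subject=${1%/} expected=0 actual f
  for f in "$subject"/Scan_*.pdf; do
    [ -e "$f" ] || continue        # skip if the glob matched nothing
    expected=$(( expected + $(page_count "$f") ))
  done
  actual=$(page_count "$subject.pdf")
  if [ "$expected" -eq "$actual" ]; then
    echo "$subject: OK ($actual pages)"
  else
    echo "$subject: MISMATCH (scans: $expected, merged: $actual)" >&2
    return 1
  fi
}
```

check_subject <subject> either prints an OK line or reports the mismatch on standard error and returns a non-zero status, so it can be chained after RotMergComPDF.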

To convert several subjects in one run, they can be passed as multiple arguments, as in the following command, where each <subject_i> is a folder containing the scanned PDF files of that subject.

```
RotMergComPDF <subject_1> <subject_2> ... <subject_n>
```

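If there are too many subjects to list by hand, the following command can be used instead. It selects the subjects based on the folder names using find; the options -mindepth 1 -maxdepth 1 restrict the search to the folders directly below the current directory, so that neither the current directory itself nor nested subfolders are passed to the script.

```bash
find . -mindepth 1 -maxdepth 1 -type d -exec ./RotMergComPDF {} +
```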
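The script processes the subjects one after another. On a multiprocessor system, several subjects could be converted at the same time, for example with xargs -P. The following is a hypothetical sketch (process_all_subjects is a made-up name). It is only safe if each run deletes just the temporary files in its own subject folder, because a cleanup such as find . -name 'rotated_*' -delete executed from the parent folder would also remove files that a parallel run still needs.

```bash
# Run a per-subject command on every folder directly below the current
# directory, with up to $2 subjects processed at the same time.
process_all_subjects() {
  local cmd=${1:-./RotMergComPDF} jobs=${2:-$(nproc)}
  find . -mindepth 1 -maxdepth 1 -type d -print0 |
    xargs -0 -n 1 -P "$jobs" "$cmd"
}
```

process_all_subjects ./RotMergComPDF 4 would convert up to four subjects simultaneously. With GNU parallel, which is already used above for counting pages, parallel ./RotMergComPDF ::: */ should have a similar effect.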

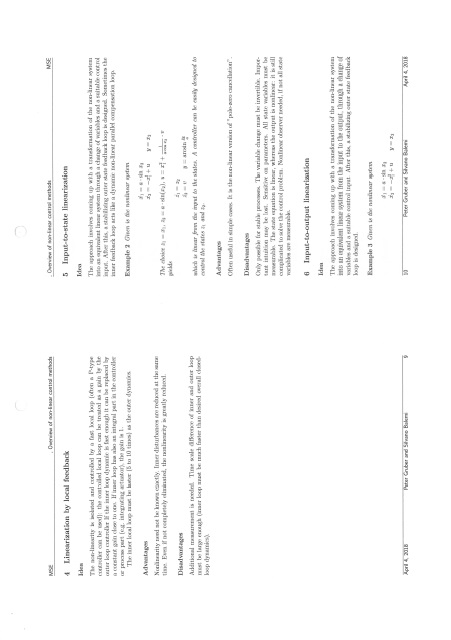

When viewing the processed PDFs, various flaws stand out. Since double-sided scanning was always used, the PDFs may contain blank back pages. This is especially annoying when scrolling through the pages. Therefore, the blank pages should be removed from the PDFs. To minimize printing costs, sometimes two pages were printed on one sheet, which leads to wrong rotations when using this script. The following image shows such an example for a sheet that contains two pages and was therefore rotated incorrectly.
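One way to find blank back pages automatically is Ghostscript's inkcov device, which reports the ink coverage of every page as four values for C, M, Y and K. The sketch below treats every page whose summed coverage stays below a threshold as blank and rebuilds the PDF without those pages using pdftk. The function names and the default threshold of 0.005 are assumptions; since scanned blank pages are rarely perfectly white, the threshold would have to be tuned on a few known blank pages first.

```bash
# Hypothetical sketch: drop (nearly) blank pages from a scanned PDF.

# Read "gs -sDEVICE=inkcov" output on stdin and print the numbers of the pages
# whose summed C+M+Y+K coverage is below the limit (first argument).
blank_pages() {
  awk -v limit="${1:-0.005}" '
    $5 == "CMYK" { page++; if ($1 + $2 + $3 + $4 < limit + 0) print page }'
}

# Read blank page numbers on stdin; print all other page numbers from 1 to $1.
non_blank_pages() {
  awk -v total="$1" '
    { blank[$1] = 1 }
    END { for (p = 1; p <= total; p++) if (!(p in blank)) print p }'
}

# Write a copy of $1 to $2 that contains only the non-blank pages.
remove_blank_pages() {
  local input=$1 output=$2 limit=${3:-0.005} total keep
  total=$(pdfinfo "$input" | awk '/^Pages:/ { print $2 }')
  keep=$(gs -q -o - -sDEVICE=inkcov "$input" | blank_pages "$limit" |
         non_blank_pages "$total" | tr '\n' ' ')
  # $keep is deliberately unquoted so that each page number becomes one argument
  pdftk "$input" cat $keep output "$output"
}
```

Before relying on it, it is worth running gs -q -o - -sDEVICE=inkcov on a sample file and piping the output to blank_pages to see which pages would be dropped.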

What’s next?

As a next step, the scanned pages could be classified according to the following properties:

- orientation (0°, 90°, 180° and 270°)

- whether the page is empty

- whether a sheet contains one or two pages

This classification would make it possible to rotate all pages correctly. Furthermore, empty pages could be removed, and sheets with two pages could be split into two single pages. Now that the documents are digitized, optical character recognition (OCR) could also be used to convert the printed or handwritten text into machine-encoded text. This would be especially helpful for searching for words within the documents.
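One possible route for both the orientation problem and the OCR step is OCRmyPDF, which adds a Tesseract text layer to a PDF and, with --rotate-pages, rotates pages based on the detected text orientation. The wrapper below is a hypothetical sketch that assumes OCRmyPDF and the required Tesseract language data are installed; the default language eng is an assumption and should be set to match the documents (e.g. deu+eng). Tesseract is designed mainly for printed text, so handwritten notes will probably not be recognized reliably.

```bash
# Hypothetical wrapper around OCRmyPDF (assumed to be installed together with
# Tesseract and the language data named in the third argument).
ocr_pdf() {
  local input=$1 output=$2 langs=${3:-eng}
  if ! command -v ocrmypdf >/dev/null 2>&1; then
    echo "ocrmypdf is not installed" >&2
    return 127
  fi
  # --rotate-pages: fix page orientation based on the detected text direction
  # --deskew:       straighten slightly skewed scans before OCR
  ocrmypdf --rotate-pages --deskew -l "$langs" "$input" "$output"
}
```

Running it on the uncompressed <subject>.pdf rather than the compressed copy is probably preferable, since OCR tends to work better on higher-resolution images.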

Summary

In this post, I described how I digitized all my study notes with a scanner and how I processed the scanned pages with a bash script into one PDF file per subject. This project was long overdue: it gives me location-independent access to all my documents and protects them against destruction. Now that my study documents are digitized, I can get rid of the paper originals. If I find the time, I will try to improve the PDFs further by removing blank pages and rotating the pages according to the orientation of their text. I will also try to convert the printed text into machine-encoded text so that keywords can be searched in the PDF viewer.